MLOps project - part 4a: Machine Learning Model Monitoring

Monitoring deployed machine learning models in production.

Note: Most of the information in this blog post comes from the documentation and talks of the tools' authors and creators. I simply put it together. The details of each company's solution are based on its documentation and online discussions.

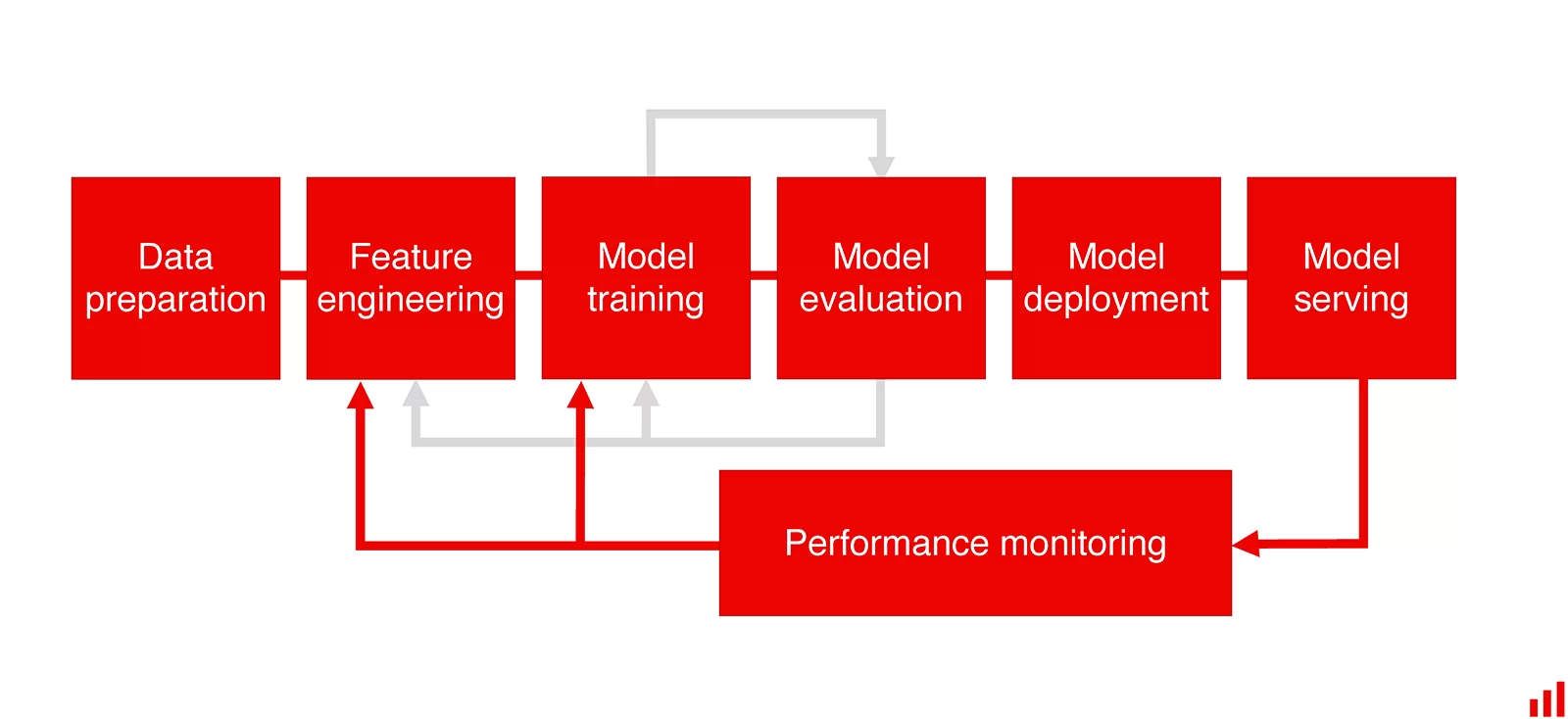

After years of research and development in labs, machine learning models are increasingly moving into production. Deploying machine learning models into production will be covered in a later post. However, a model's life does not end at deployment: once it is live, it has to be monitored to make sure it keeps doing its job.

In this article, we will discuss different monitoring solutions for machine learning models in production. We will look at how these solutions help us determine whether a model performs as expected and, if not, what to do about it. Let's get started!

First, what is machine learning model monitoring?

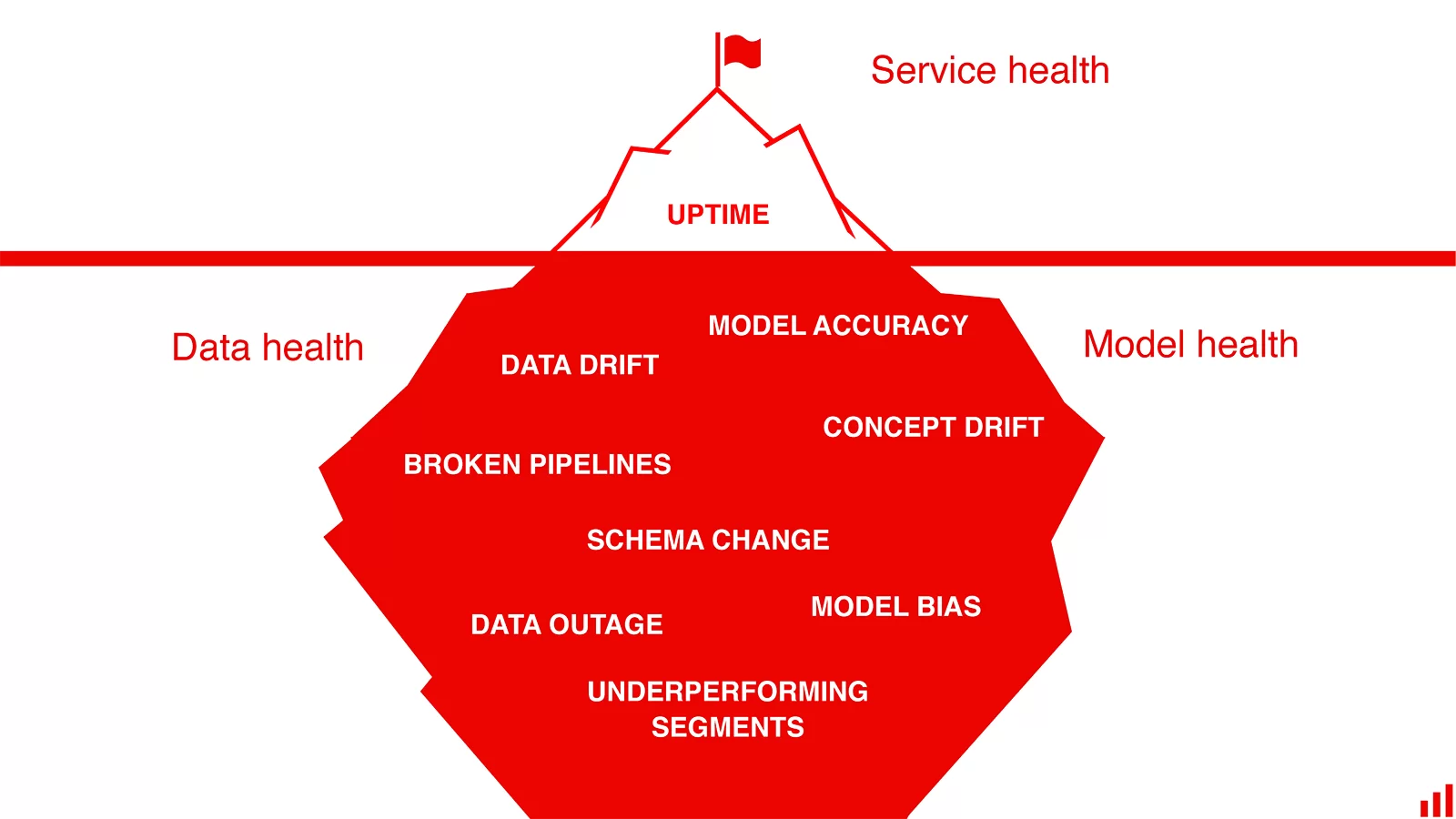

Machine learning model monitoring is the practice of tracking and analyzing the performance of production models to ensure acceptable quality as defined by the use case. It provides early warnings about performance issues and helps diagnose their root causes so they can be debugged and resolved. [source] Many things can go wrong with the data and the model in a machine learning service that conventional software monitoring tools cannot detect: broken data pipelines, poor data quality, high inference latency, degraded model accuracy, and more. Waiting for business metrics and KPIs to drop may mean discovering a machine learning problem too late. In addition, failing to discover these issues proactively can seriously hurt business performance and the company's credibility with end users. That is why detecting these problems and acting on them in time is so important.

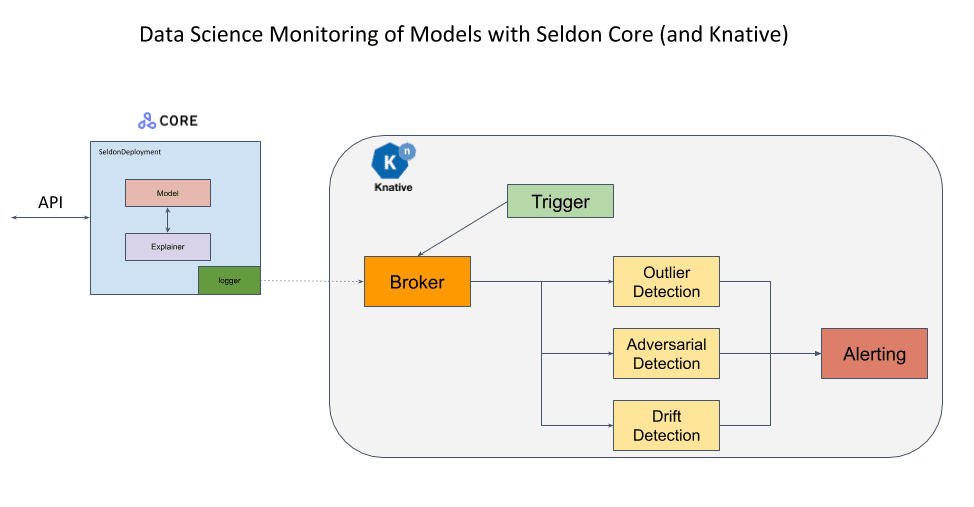

To learn the concepts behind machine learning model monitoring, I can't recommend anything more than the Evidently AI blog posts. I also found this blog post by Aporia and this one by Seldon useful.

All models degrade. The problems that can affect a machine learning service fall into a few categories:

- Sometimes, poor data quality, broken pipelines, or technical bugs cause a performance drop.

- Sometimes it is data drift, a change in the data distributions: the model performs worse on regions of the data it has not seen before.

- And sometimes it is concept drift, a change in the relationships between inputs and target: the world has changed, and the model needs an update. Concept drift can be gradual (expected), sudden (abrupt), or recurring (seasonal).
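To make data drift more concrete, here is a minimal sketch of a per-feature check using the two-sample Kolmogorov-Smirnov test from SciPy. It is not how any particular monitoring tool works internally; the function name `detect_feature_drift`, the 0.05 significance threshold, and the simulated `income`/`age` columns are illustrative assumptions.

```python
import numpy as np
import pandas as pd
from scipy.stats import ks_2samp


def detect_feature_drift(reference: pd.DataFrame, current: pd.DataFrame,
                         alpha: float = 0.05) -> pd.DataFrame:
    """Hypothetical helper: two-sample KS test on every column shared by both datasets.

    A feature is flagged as drifted when its p-value falls below `alpha`.
    """
    rows = []
    for column in reference.columns.intersection(current.columns):
        statistic, p_value = ks_2samp(reference[column].dropna(), current[column].dropna())
        rows.append({"feature": column, "ks_statistic": statistic,
                     "p_value": p_value, "drifted": bool(p_value < alpha)})
    return pd.DataFrame(rows).set_index("feature")


rng = np.random.default_rng(0)
reference = pd.DataFrame({"income": rng.normal(3.0, 1.0, 1000),
                          "age": rng.normal(30.0, 10.0, 1000)})
# Simulated production batch: "income" shifts upward, "age" keeps its distribution
current = pd.DataFrame({"income": rng.normal(4.0, 1.0, 1000),
                        "age": rng.normal(30.0, 10.0, 1000)})

report = detect_feature_drift(reference, current)
print(report)
```

A low p-value only says the two samples probably come from different distributions. With large samples, even tiny and harmless shifts become "significant", so the size of the statistic matters as much as the p-value.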

For more details, read the references mentioned above or listed at the end of this blog post. Now let's look at solutions and tools for ML model monitoring.

Evidently AI

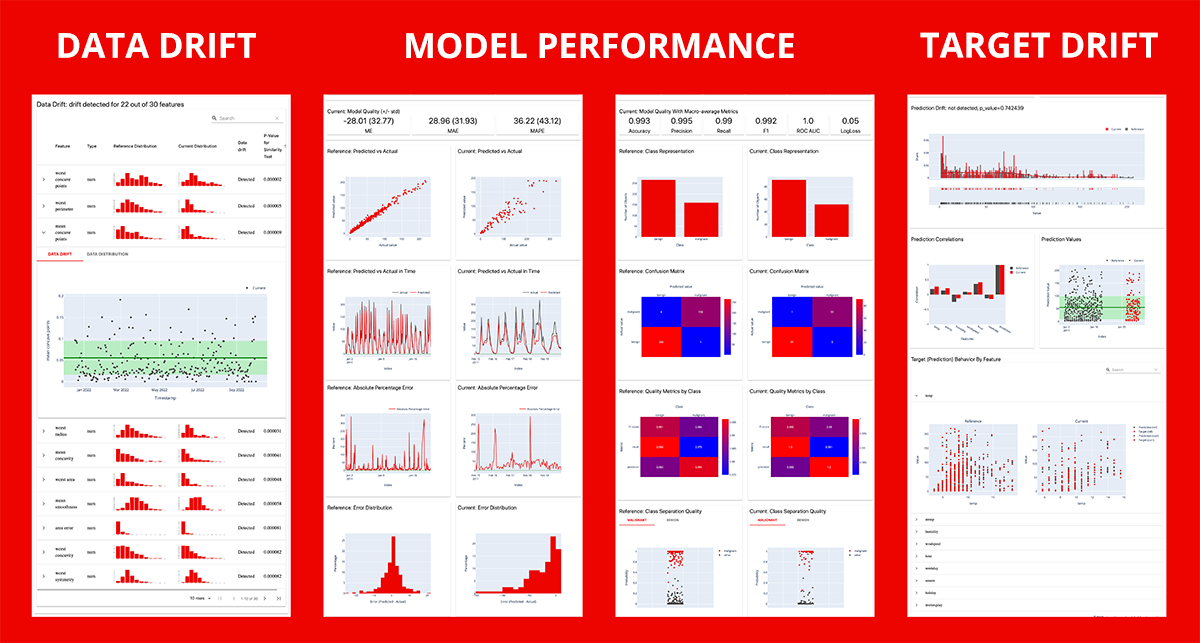

Evidently AI is one of the hottest startups working on this topic. According to its documentation, Evidently generates interactive dashboards from pandas DataFrames or CSV files that can be used for model evaluation, debugging, and documentation.

Each report covers a particular aspect of model performance. At the time of writing, 7 pre-built reports are available:

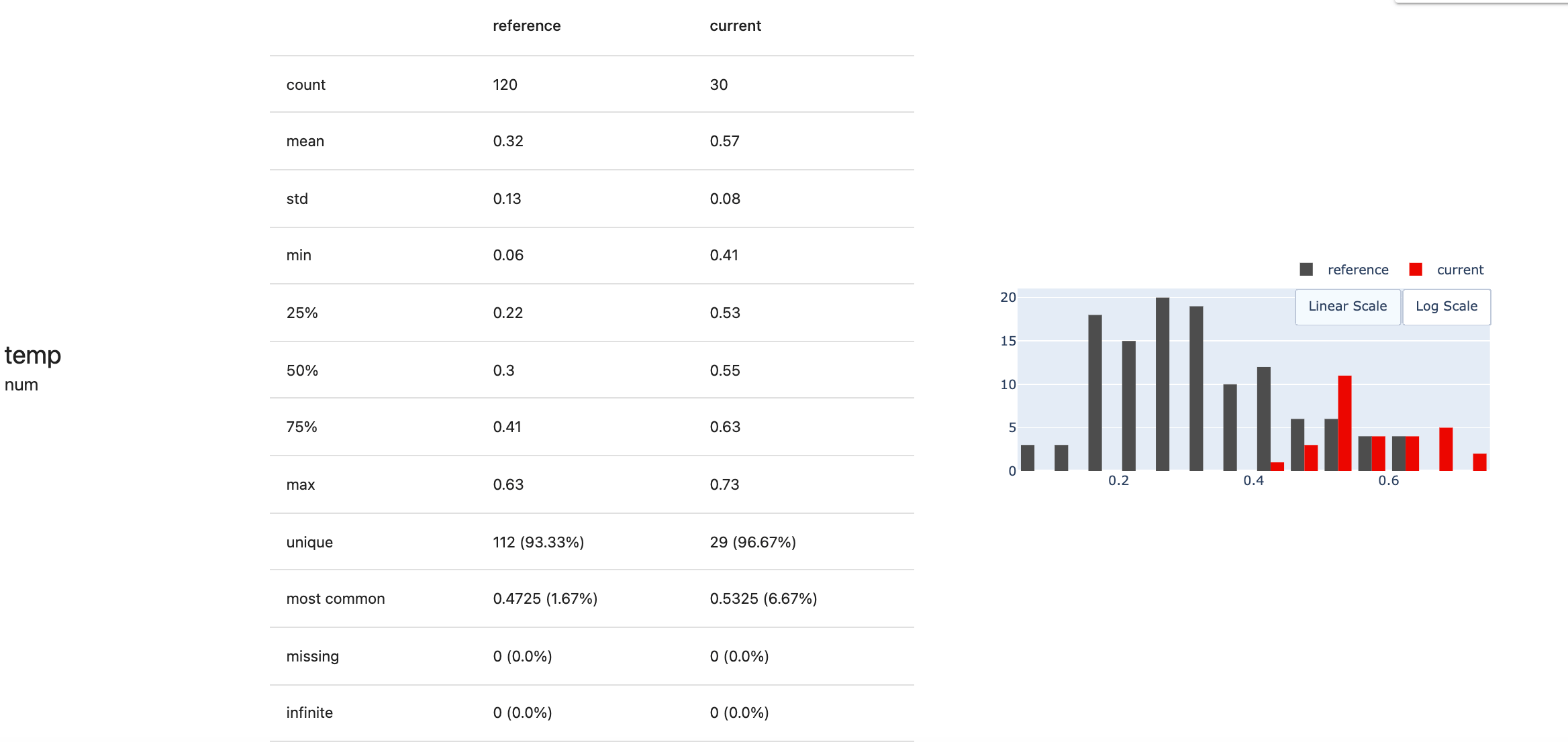

- Data Drift: Detects changes in the input feature distributions. It requires two datasets: a reference (benchmark) dataset and the current production data, which is compared against the reference to analyze the change. To estimate data drift, Evidently compares the distribution of each feature in the two datasets and uses statistical tests to determine whether the distribution has shifted significantly.

- Data Quality: Provides detailed feature statistics and an overview of feature behavior. It can also compare any two datasets, for example train and test data, reference and current data, or two subsets of a single dataset (e.g., customers in different regions).

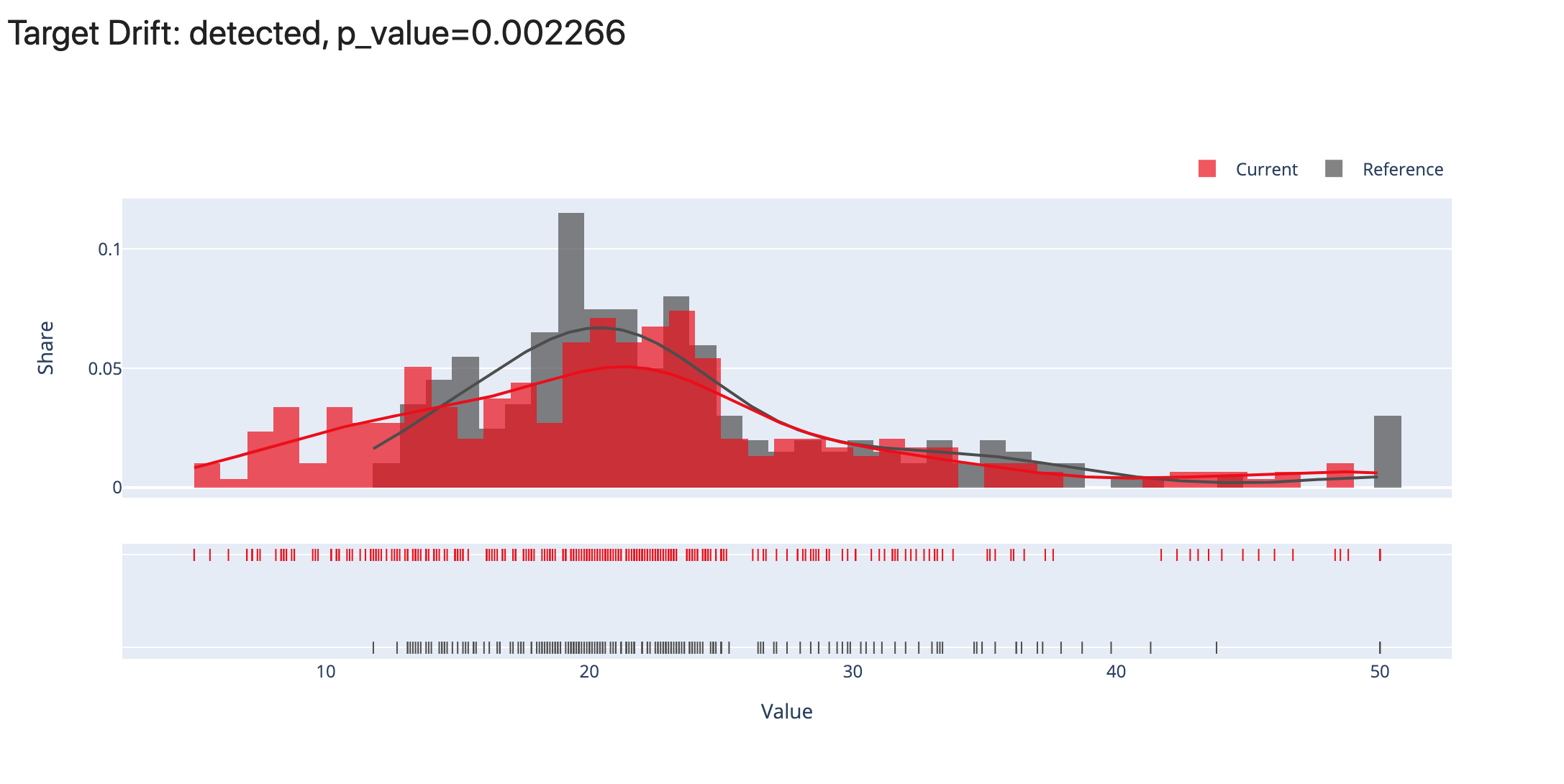
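At its core, a data quality comparison means computing the same statistics on two datasets and putting them side by side. The sketch below does that with pandas for numerical columns only. The helper name `compare_feature_stats` and the chosen statistics (mean, min, max, share of missing values) are illustrative assumptions, not the contents of Evidently's report.

```python
import numpy as np
import pandas as pd


def compare_feature_stats(reference: pd.DataFrame, current: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical helper: side-by-side statistics for every numerical column."""
    def summarize(frame: pd.DataFrame) -> pd.DataFrame:
        numeric = frame.select_dtypes(include="number")
        return pd.DataFrame({
            "mean": numeric.mean(),              # NaNs are skipped
            "min": numeric.min(),
            "max": numeric.max(),
            "missing_share": numeric.isna().mean(),
        })

    # Rows are features; columns are (dataset, statistic) pairs
    return pd.concat({"reference": summarize(reference),
                      "current": summarize(current)}, axis=1)


reference = pd.DataFrame({"rooms": [5.0, 6.0, 4.0, 5.0], "age": [20, 35, 41, 28]})
current = pd.DataFrame({"rooms": [5.0, np.nan, 7.0, np.nan], "age": [22, 30, 90, 27]})

stats = compare_feature_stats(reference, current)
print(stats)
```

In this toy example, half of the current `rooms` values are missing and `age` suddenly reaches 90: the kind of change worth investigating before running any drift test.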

- Target Drift:

Numerical: The Target Drift report helps identify and investigate changes in the target function and/or model predictions. The Numerical Target Drift report suits problems with a numerical target function, such as regression, probabilistic classification, and ranking. To run it, you need the input features and the target and/or prediction columns. It also requires two datasets: a reference (benchmark) dataset and the current production data, which is compared against the reference to analyze the change.

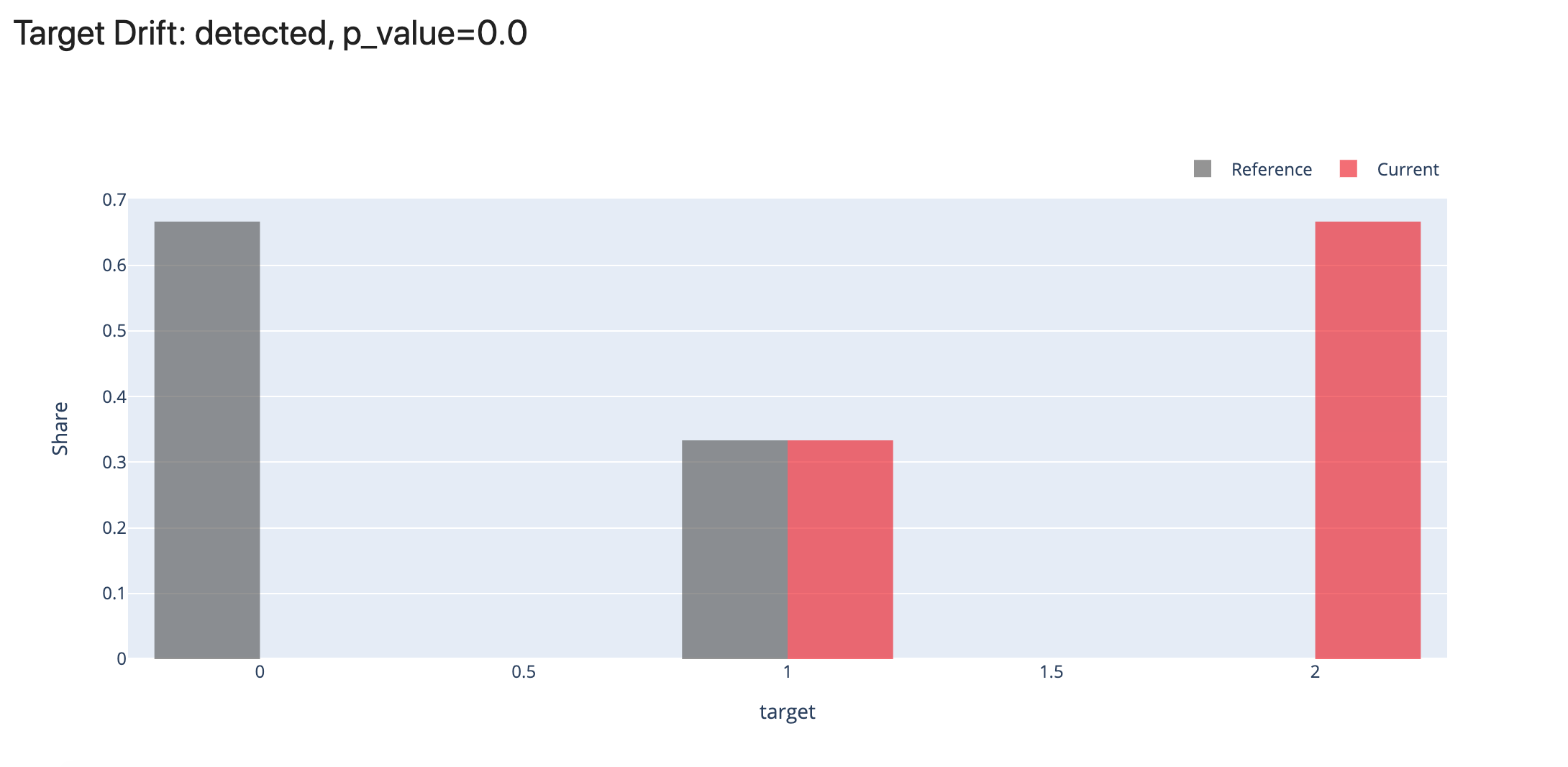

Categorical: The Categorical Target Drift report suits problems with a categorical target function, such as binary or multi-class classification. To run it, you need the input features and the target and/or prediction columns. As with the numerical version, it requires a reference (benchmark) dataset and the current production data to compare against it.
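For a categorical target (or categorical predictions), one common way to test for drift is a chi-square test on the class frequencies in the two datasets. Below is a minimal sketch with SciPy. The function name, the 0.05 threshold, and the `churn`/`stay` labels are assumptions for illustration, and Evidently may choose a different test.

```python
import pandas as pd
from scipy.stats import chi2_contingency


def categorical_target_drift(reference: pd.Series, current: pd.Series,
                             alpha: float = 0.05) -> dict:
    """Hypothetical helper: chi-square test of homogeneity on class counts."""
    # Rows: dataset; columns: class label. A class missing from one dataset gets a 0 count.
    counts = pd.DataFrame({"reference": reference.value_counts(),
                           "current": current.value_counts()}).fillna(0).T
    chi2, p_value, dof, _expected = chi2_contingency(counts.to_numpy())
    return {"chi2": chi2, "p_value": p_value, "dof": dof,
            "drifted": bool(p_value < alpha)}


reference = pd.Series(["churn"] * 100 + ["stay"] * 900)   # 10% churn
current = pd.Series(["churn"] * 250 + ["stay"] * 750)     # 25% churn

result = categorical_target_drift(reference, current)
print(result)
```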

- Model Performance:

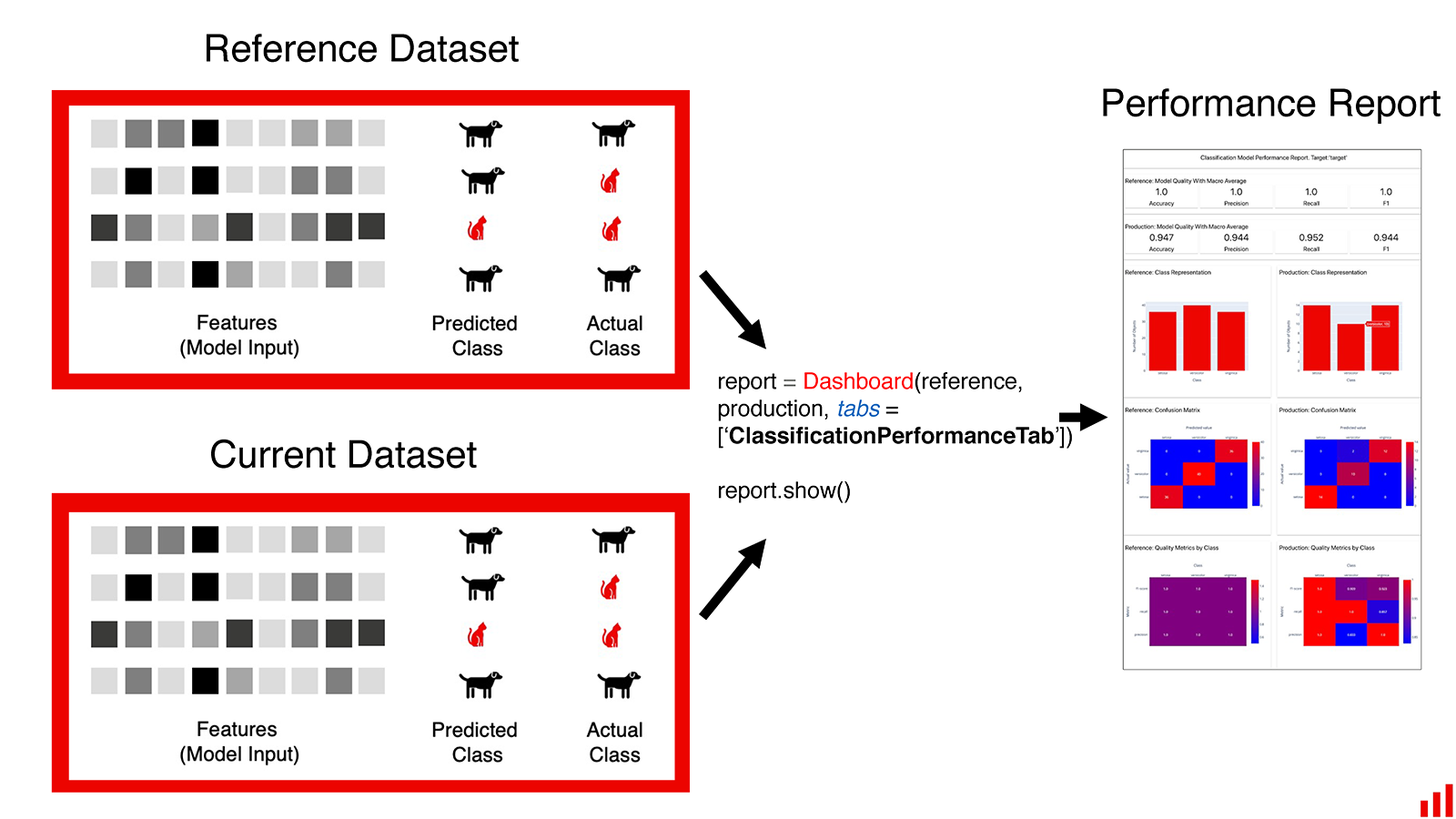

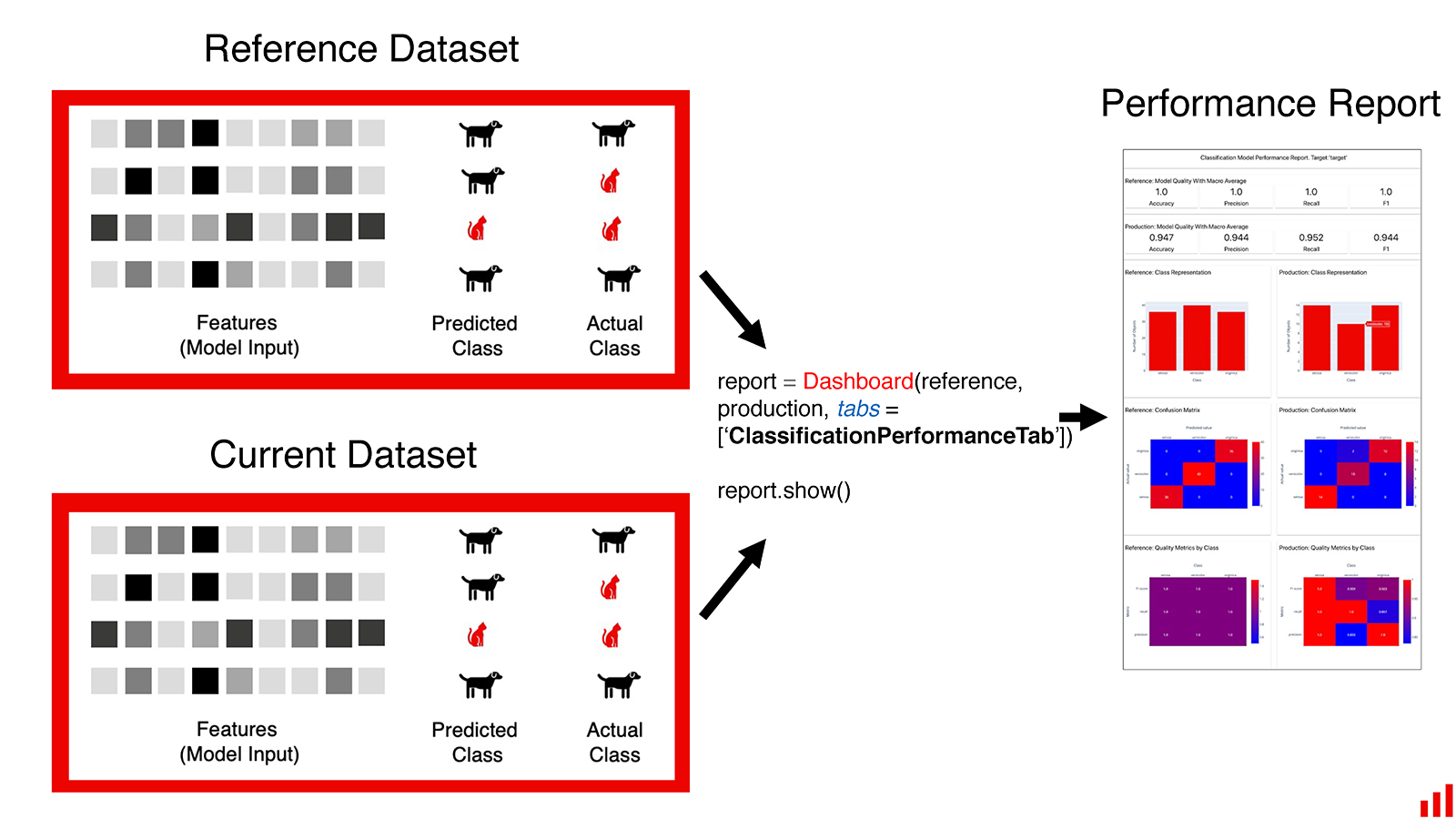

Classification: The Classification Performance report evaluates the quality of a classification model. It works for both binary and multiclass classification and can be generated for a single model or as a comparison between two models, e.g., your current production model versus a previous or alternative model. To run it, you need the input features, target columns, and prediction columns.

Probabilistic Classification: The Probabilistic Classification Performance report evaluates the quality of a probabilistic classification model. It also works for both binary and multiclass classification and can be generated for a single model or as a comparison between two models, such as your current production model and a previous or alternative one. To run it, you need the input features, target columns, and prediction columns.
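The comparison these reports make can be approximated by computing scikit-learn metrics on two periods. Below is a minimal sketch for a binary probabilistic classifier. The helper `classification_quality`, the 0.5 threshold, and the toy labels and scores are assumptions, and the same `y_true` is reused for both periods only to keep the example short.

```python
import numpy as np
import pandas as pd
from sklearn.metrics import accuracy_score, f1_score, roc_auc_score


def classification_quality(y_true, y_score, threshold: float = 0.5) -> dict:
    """Hypothetical helper: metrics for a binary classifier from predicted P(class 1)."""
    y_true = np.asarray(y_true)
    y_score = np.asarray(y_score)
    y_pred = (y_score >= threshold).astype(int)  # hard labels from probabilities
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "f1": f1_score(y_true, y_pred),
        "roc_auc": roc_auc_score(y_true, y_score),  # threshold-independent
    }


y_true = [0, 0, 1, 1, 1, 0, 1, 0]
reference_scores = [0.1, 0.2, 0.9, 0.8, 0.7, 0.3, 0.6, 0.4]
current_scores = [0.2, 0.6, 0.9, 0.4, 0.7, 0.1, 0.3, 0.2]

comparison = pd.DataFrame({
    "reference": classification_quality(y_true, reference_scores),
    "current": classification_quality(y_true, current_scores),
})
print(comparison.round(3))
```

Accuracy and F1 depend on the chosen threshold, while ROC AUC only measures how well the scores rank positives above negatives, so it is worth tracking both kinds of metric.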

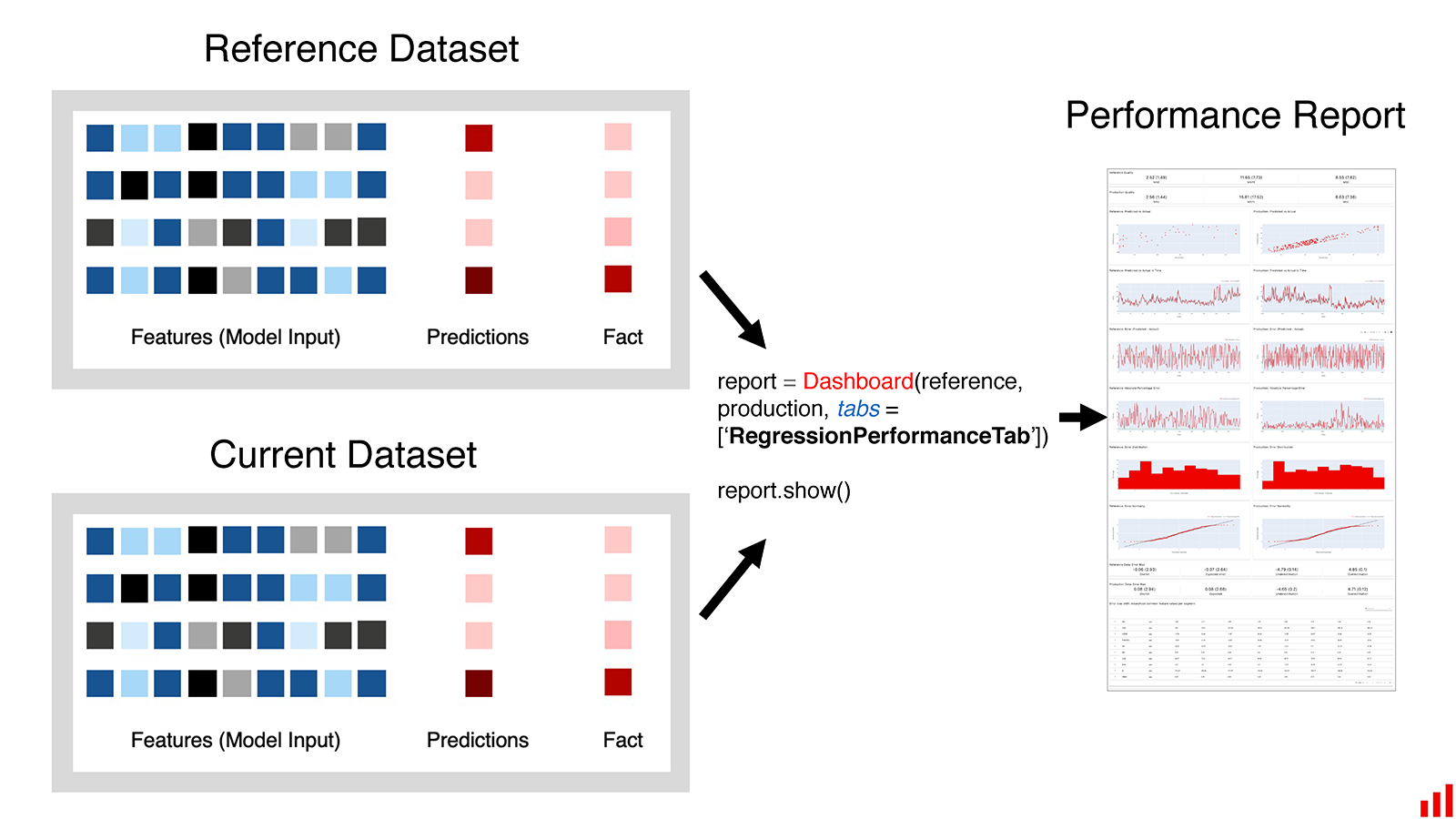

Regression: The Regression Performance report evaluates the quality of a regression model. It can also compare the model to its own past performance or to a different model. To run it, you need the input features, target columns, and prediction columns. A comparative report requires two datasets: a reference (benchmark) dataset and the current production data, which is compared against the reference to analyze the change.
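The same idea applies to regression: compute error metrics on the reference and current data and compare them. Below is a minimal sketch with scikit-learn. The helper `regression_quality` and the toy values are assumptions. The mean error (prediction minus actual) is included because a consistent over- or underestimate can be easier to interpret than a change in MAE alone.

```python
import numpy as np
import pandas as pd
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score


def regression_quality(y_true, y_pred) -> dict:
    """Hypothetical helper: headline error metrics for a regression model."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return {
        "mae": mean_absolute_error(y_true, y_pred),
        "rmse": float(np.sqrt(mean_squared_error(y_true, y_pred))),
        "r2": r2_score(y_true, y_pred),
        # Positive values mean the model overestimates on average
        "mean_error": float(np.mean(y_pred - y_true)),
    }


comparison = pd.DataFrame({
    "reference": regression_quality([2, 3, 4, 5], [2.1, 2.9, 4.2, 4.8]),
    "current": regression_quality([2, 3, 4, 5], [2.5, 3.6, 4.4, 5.5]),
})
print(comparison.round(3))
```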

Again, I highly recommend reading their blog posts for more details; we only reviewed a small portion here. Now let's look at a small demo of data drift and numerical target drift on the California Housing dataset:

```python
# This demo uses Evidently's legacy Dashboard/tabs API from early releases (0.1.x).
# Later releases replaced it with the Report API, so run it with a matching older version.
import warnings

import pandas as pd
from sklearn.datasets import fetch_california_housing

from evidently.dashboard import Dashboard
from evidently.dashboard.tabs import DataDriftTab, NumTargetDriftTab
from evidently.model_profile import Profile
from evidently.model_profile.sections import DataDriftProfileSection, NumTargetDriftProfileSection
from evidently.pipeline.column_mapping import ColumnMapping

%matplotlib inline

# Silence library warnings to keep the notebook output readable
warnings.filterwarnings('ignore')

# Load California Housing as a DataFrame (features plus the MedHouseVal target column)
ca = fetch_california_housing(as_frame=True)
ca_frame = ca.frame
ca_frame.head()

# Tell Evidently which column is the target and which columns are numerical features
target = 'MedHouseVal'
numerical_features = ['MedInc', 'HouseAge', 'AveRooms', 'AveBedrms', 'Population', 'AveOccup',
                      'Latitude', 'Longitude']
categorical_features = []
features = numerical_features

column_mapping = ColumnMapping()
column_mapping.target = target
column_mapping.numerical_features = numerical_features

# Use the first 15,000 rows as reference data and the remaining rows as "production" data,
# sampling 1,000 rows from each
ref_data_sample = ca_frame[:15000].sample(1000, random_state=0)
prod_data_sample = ca_frame[15000:].sample(1000, random_state=0)

# Build a dashboard with a data drift tab and a numerical target drift tab, then render it inline
ca_data_and_target_drift_dashboard = Dashboard(tabs=[DataDriftTab(verbose_level=0),
                                                     NumTargetDriftTab(verbose_level=0)])
ca_data_and_target_drift_dashboard.calculate(ref_data_sample, prod_data_sample,
                                             column_mapping=column_mapping)
ca_data_and_target_drift_dashboard.show(mode='inline')
```